My First Nomad Cluster

My own notes — not a guide — on standing up my first Nomad cluster on AWS.

Kevin Wang

It’s a chilly 35 °F morning here in New York.

It has certainly been a minute since I last wrote anything. Work has been quite busy, and life has been, well... turbulent to say the least. But I’m nearly fully back in the regular swing of things.

This post is going to be about Nomad. Not the why, or the what, but rather the how. More specifically, how I went about standing up my first Nomad cluster on AWS.

This is meaningful, personally, because I have a grand goal of understanding and using all 8 of HashiCorp’s core products.

My progress thus far looks like...

- Waypoint: Contributor — I’ve contributed several AWS Lambda, K8s plugin improvements to the project, created the lambda-function-url plugin, and am working on an app-runner plugin... which I should finish up 😅.

- Terraform: User — I use Terraform semi-regularly on my team at HashiCorp for the usual infrastructure provisioning. I also created a module that manages a set of nearly identical GitHub files across all these 👆👇 core product repositories.

- Nomad: Dabbler — I’ve stood up a production cluster, and run a handful of containerized jobs.

- Consul: Dabbler — I’ve dabbled with the native integration with Nomad. However, I wouldn’t be able to explain why or when you would use Consul.

- Vault: Dabbler — Vault is next on my list to learn. I’ve only interacted with a local dev server.

- Boundary: Never touched — Don’t know what it does.

- Packer: Never touched — Should look into this for EC2 AMI creation.

- Vagrant: Never touched — Don’t know what it does.

Now, on to the actual write up.

Nomad

I wanted to create a production Nomad cluster. No, not nomad agent -dev. A production cluster with multiple nodes: the whole shebang with a leader and followers.

Prior to this, my only experience with clusters has been with Kubernetes. And in those situations, the underlying nodes were always abstracted away from me by the platform, whether it was EKS or GKE. I never once had to think about leaders or followers, just declarative YAML files for creating pods.

Checklist

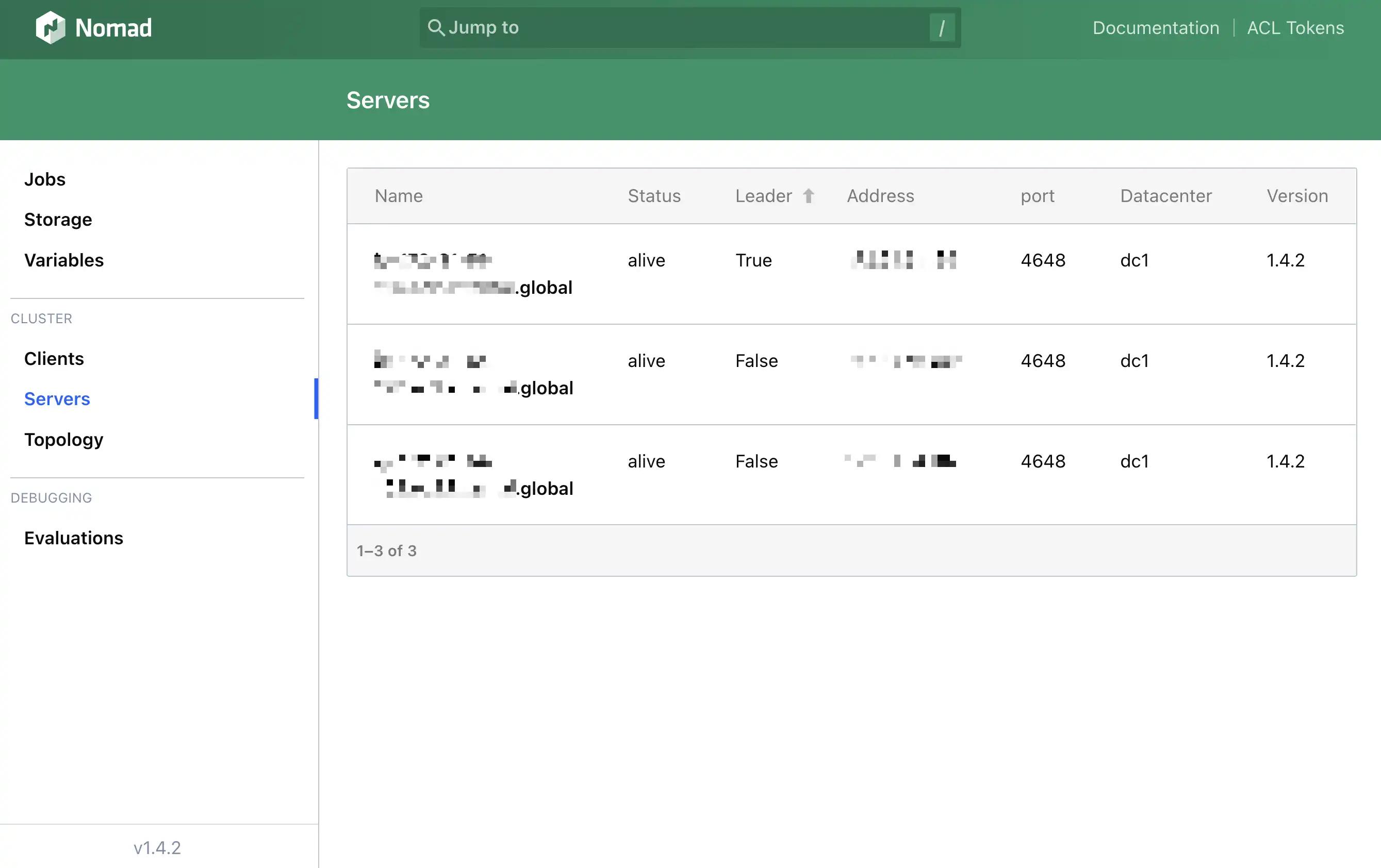

My criteria for a fully qualified production cluster were simply:

- Must have 3 server nodes in alive status, with one leader

- Should be publicly accessible

- Should be reasonably secure

- Should be able to run a containerized job

I’ll reference this checklist throughout the rest of the post.

Initial Wall

The first resource I reached for was this Nomad tutorial, but I quickly turned away due to the hefty prerequisites.

This particular tutorial called for Packer, Terraform, and Consul usage, to which I thought with a ton of skepticism, “Wtf? It cannot possibly require all these additional tools just to create a Nomad cluster...” That’s like looking for the quickest spaghetti recipe and finding one that requires you to make the pasta and sauce from scratch.

I eventually pulled from various scattered documentation pages and embarked on some good ol’ AWS click-ops. The process that ensued was ultimately what drove me to write this post.

Cluster Configuration

My very surface-level knowledge of clusters and the fact that Nomad is meant to be run as a cluster led me to create 3 EC2 instances, and then pray that Nomad would magically join them somehow.

Clusters typically call for an odd number of members, which helps with fault tolerance and with preventing the “split brain” problem. 1
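As a quick worked example of why odd sizes are preferred, assuming the usual majority-quorum rule that Raft-style consensus relies on:

$$
\text{quorum}(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1,
\qquad
\text{tolerated failures}(n) = n - \text{quorum}(n)
$$

With $n = 3$ the quorum is 2 and the cluster survives 1 failure. With $n = 4$ the quorum is 3 and it still survives only 1 failure, so the fourth server adds cost without adding tolerance. With $n = 5$ the quorum is 3 and 2 failures are tolerated.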

3 Little EC2 Piggies

Nomad suggests using fairly powerful specs for Nomad servers. However, for my discovery needs, the cheap t2.small instance size worked just fine.

I created three of these Amazon Linux EC2 instances, SSH’d into each, and did some repetitive manual setup, such as installing nomad itself and zsh for productivity.

Nomad Config Files

Nomad looks for a config file at /etc/nomad.d/nomad.hcl by default. These are the configurations that I arrived at for my leader and followers.
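The config files themselves are not reproduced here. As a rough sketch of what a three-server agent config can look like, assuming every node runs both a server and a client (the datacenter name, data directory, and private IPs below are placeholder assumptions, not the values from this cluster):

```hcl
# /etc/nomad.d/nomad.hcl (illustrative sketch, not the original file)
datacenter = "dc1"             # assumed datacenter name
data_dir   = "/opt/nomad/data" # assumed data directory
bind_addr  = "0.0.0.0"         # listen on all interfaces

server {
  enabled = true

  # Wait for 3 servers before electing a leader.
  bootstrap_expect = 3

  server_join {
    # Hypothetical private IPs of the three EC2 instances.
    retry_join = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]
  }
}

client {
  # Also run a client on each node so jobs can be scheduled there.
  enabled = true
}
```

Nomad elects its leader through Raft, so a file like this can be identical on all three nodes. The leader and follower files used for this cluster may well have differed from this sketch.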

Running

At this point I was ready to run Nomad.

This enterprise guide was pretty helpful in showing how to run Nomad as a background service using systemd/systemctl.

There are some chmod commands for permitting file/folder modifications, but I don’t remember if they're needed or not.

UI

The Nomad web UI, viewable at port 4646, was my next stop and the quickest way to validate cluster health.

Check ✅. I had created my first fully functional production Nomad cluster, reachable at a public URL like http://<ec2-public-ip>:4646/ui/jobs.

- ✅ Must have 3 server nodes in alive status, with one leader

- ✅ Should be publicly accessible

- Should be reasonably secure

- Should be able to run a containerized job

Basic Auth

Nomad, including the UI, is not secure by default, unlike say Waypoint and its UI.

Without diving into fine-grained access control, I wanted basic authentication to be required for all requests to my cluster. This was to keep out any trolls or bad actors.

My leader config file with an additional acl stanza:
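The file itself is not shown here. As a minimal sketch, turning on ACLs only requires adding a stanza like the one below to the agent config. This is an illustration rather than the exact file used on this cluster, and Nomad's documentation describes enabling it on every server and client agent, not just the leader:

```hcl
# Added to /etc/nomad.d/nomad.hcl (illustrative sketch)
acl {
  # Require a valid token for API, CLI, and UI requests.
  enabled = true
}
```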

I restarted the process (sudo systemctl restart nomad) and ran nomad acl bootstrap to generate an initial management token for authenticated CLI and UI requests.

Note

Pass the Secret ID to the UI, or set it as the NOMAD_TOKEN environment variable for the CLI.

Now my cluster was reasonably secure. Time to schedule a job.

- ✅ Must have 3 server nodes in alive status, with one leader

- ✅ Should be publicly accessible

- ✅ Should be reasonably secure

- Should be able to run a containerized job

Jobs

The most mind-blowing thing I’ve witnessed with Nomad is the spawning of minecarts in Minecraft.

But I simply wanted to run a Docker container, similar to Kubernetes’ main use case.

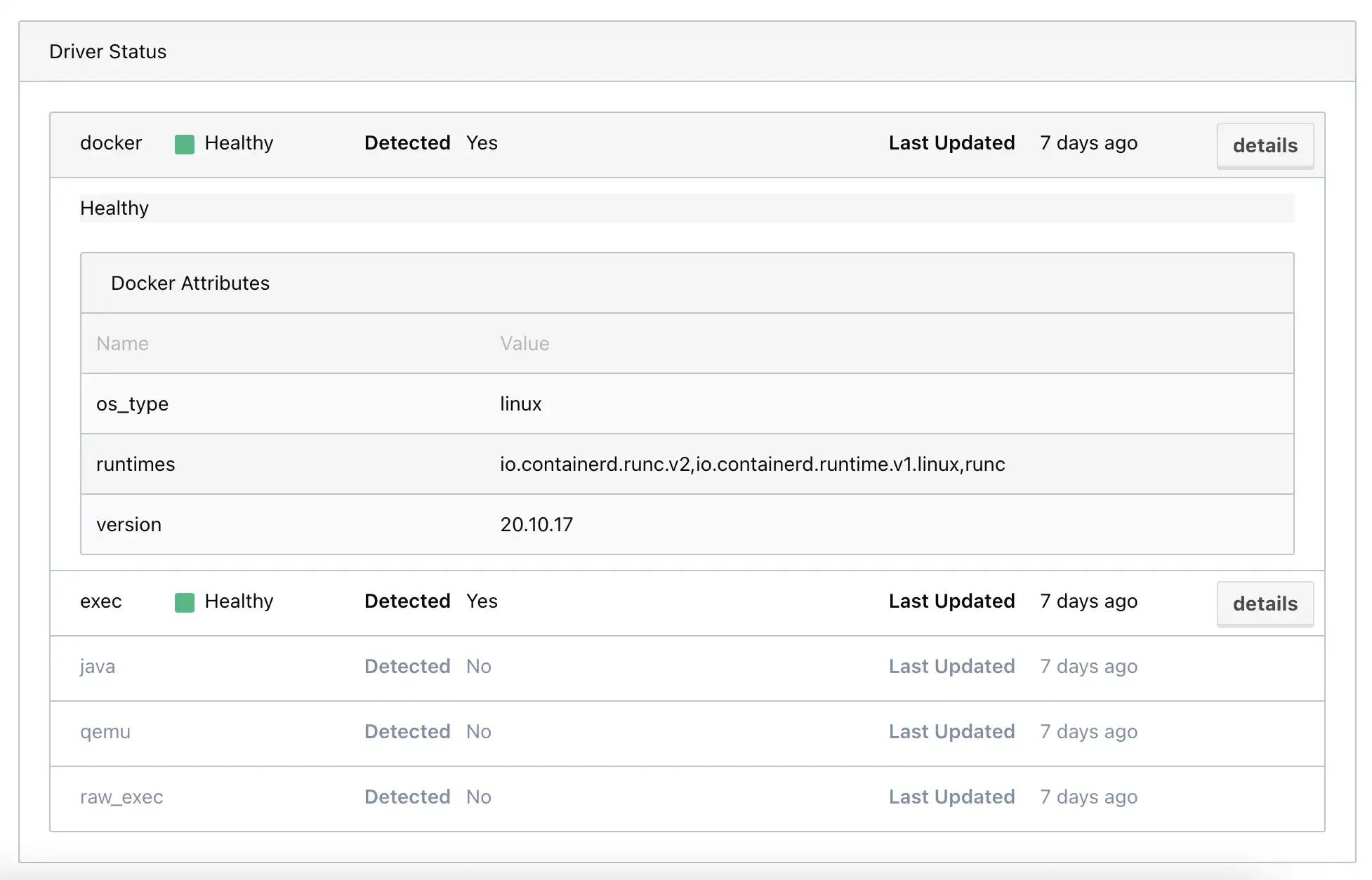

Docker Driver

The machines that Nomad runs on must have Docker installed for the docker driver to work.

After this is done, the UI should show the Docker driver status as Healthy.

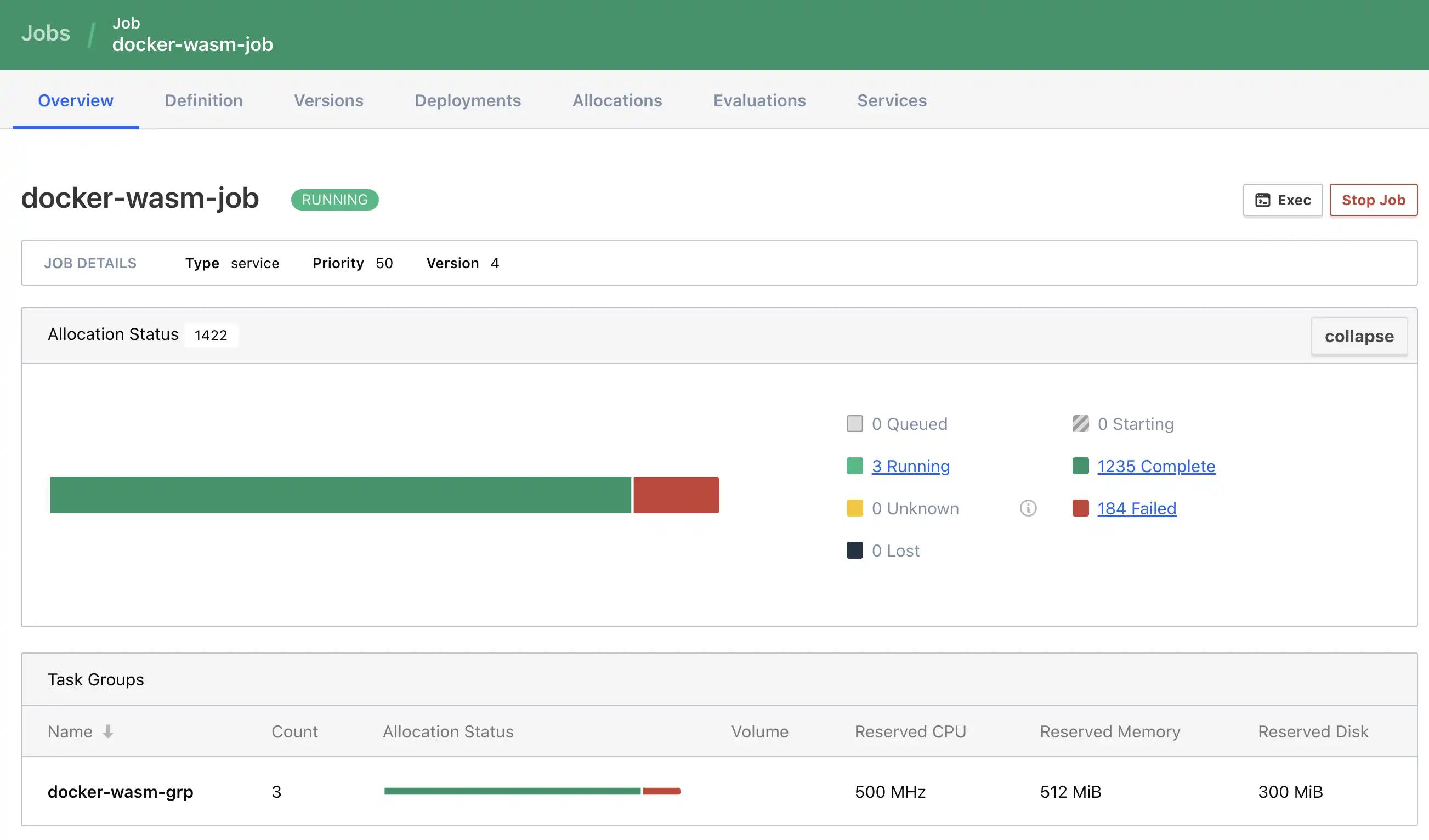

Scheduling a Container

I had a Docker image from a previous project handy, which I wanted to run with Nomad.

Note: Image info here

Nomad has a handy nomad job init command to generate a sample job file, whose HCL stanzas definitely come with a bit of a learning curve.

I trimmed the sample file down to the following:
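The trimmed file is not reproduced here. For illustration, a minimal Docker job spec might look like the following. The image name, container port, group and task names, and resource values are hypothetical placeholders, and the job name is only borrowed from the docker-wasm.nomad file name:

```hcl
# docker-wasm.nomad (illustrative sketch, not the original file)
job "docker-wasm" {
  datacenters = ["dc1"] # assumed datacenter name
  type        = "service"

  group "web" {
    count = 1

    network {
      # Map a dynamic host port to the container's port 8080 (assumed).
      port "http" {
        to = 8080
      }
    }

    task "server" {
      driver = "docker"

      config {
        image = "example/docker-wasm:latest" # hypothetical image
        ports = ["http"]
      }

      resources {
        cpu    = 100 # MHz
        memory = 128 # MB
      }
    }
  }
}
```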

This then gets run with nomad job run docker-wasm.nomad.

Voila! — It was working!

Mission complete!

- ✅ Must have 3 server nodes in alive status, with one leader

- ✅ Should be publicly accessible

- ✅ Should be reasonably secure

- ✅ Should be able to run a containerized job

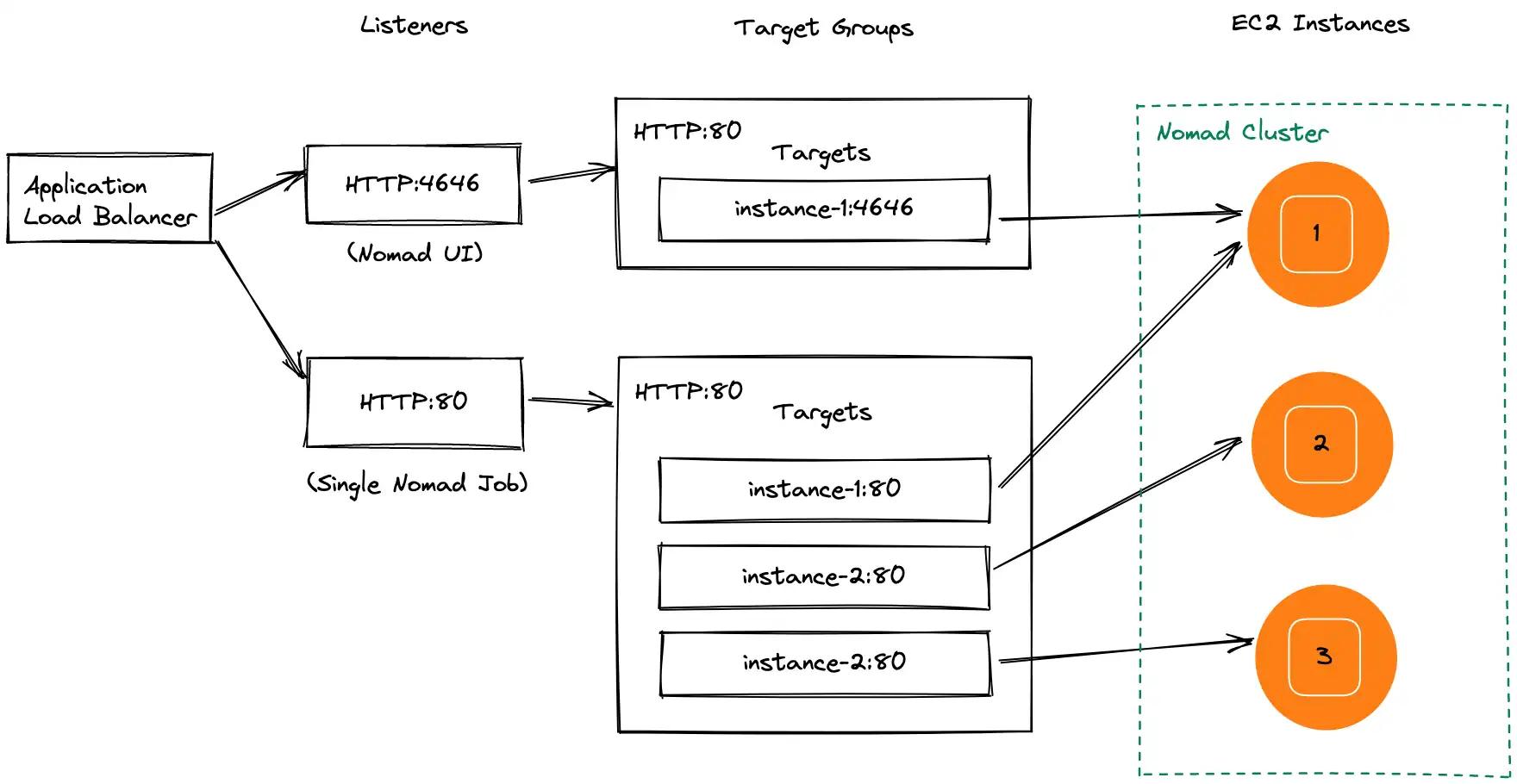

AWS

I was largely complete with my original goal... and inevitably went on to do some additional hardening of my cluster setup, like fronting my cluster with a load balancer. This was mostly so I could share a URL with my coworkers that wasn’t an IP address of an EC2 instance.

This is what the final architecture looked like:

Retrospective

After having gone through the end-to-end flow, I thought about some things that I could have done differently, or could improve moving forward.

Ok, packer

Could I use Packer to build an AMI with Nomad, ZSH, and Docker already installed? I guess it’s time to use Packer...
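A rough sketch of what such a Packer template could look like is below. It has not been run against this cluster, and the region, AMI filter, AMI name, and install commands are all assumptions based on an Amazon Linux 2 base image:

```hcl
# nomad-node.pkr.hcl (illustrative sketch under the assumptions above)
packer {
  required_plugins {
    amazon = {
      version = ">= 1.0.0"
      source  = "github.com/hashicorp/amazon"
    }
  }
}

locals {
  # AMI names must be unique, so append a compact timestamp.
  timestamp = regex_replace(timestamp(), "[- TZ:]", "")
}

source "amazon-ebs" "nomad_node" {
  ami_name      = "nomad-node-${local.timestamp}" # hypothetical name
  instance_type = "t2.small"
  region        = "us-east-1" # assumed region
  ssh_username  = "ec2-user"

  source_ami_filter {
    filters = {
      name                = "amzn2-ami-hvm-*-x86_64-gp2"
      root-device-type    = "ebs"
      virtualization-type = "hvm"
    }
    most_recent = true
    owners      = ["amazon"]
  }
}

build {
  sources = ["source.amazon-ebs.nomad_node"]

  # Bake in the tools that were installed by hand over SSH.
  provisioner "shell" {
    inline = [
      "sudo yum install -y yum-utils zsh docker",
      "sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo",
      "sudo yum install -y nomad",
      "sudo systemctl enable docker",
    ]
  }
}
```

Running packer init followed by packer build against a file like this would produce an AMI that each new instance could boot from, skipping the repetitive manual setup.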

Ok, terraform

All the click-ops — not to mention the eventual tear down — I went through could be replaced by Terraform infrastructure-as-code.

This would be a must for a team setting.
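As a starting point, the three instances alone could be described in a few lines of Terraform. This is only a sketch: the variable name and tags are hypothetical, and the security group, load balancer, and networking pieces are left out:

```hcl
# main.tf (illustrative sketch covering only the EC2 instances)
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

variable "ami_id" {
  type        = string
  description = "AMI to launch, for example an Amazon Linux image with Nomad installed"
}

resource "aws_instance" "nomad_server" {
  count         = 3 # odd number of servers for Raft quorum
  ami           = var.ami_id
  instance_type = "t2.small"

  tags = {
    Name = "nomad-server-${count.index}"
  }
}
```

With something like this in place, terraform apply would replace the console clicking and terraform destroy would handle the tear down.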

No Public IPs

Given that my load balancer has a public URL, I could omit public IPs for all the EC2 instances. This is only adjustable at the time of instance creation though.

This would also help narrow down the inbound rules on my security group.

ARM is Cheaper than x86

I had initially chosen x86 for my EC2 architecture because I didn’t want to run into any potentially obscure issues with the newer ARM architecture.

In the past, I’ve run into a “fun” bug with running an ARM Docker container on AWS AppRunner. AppRunner only supports x86 and would hang for up to 10 minutes only to eventually fail with an “exec format error”.

A quick cost comparison showed that ARM instance types are cheaper than x86 (about 27% less in the comparison below), and Nomad supports both so it probably would have been fine.

| Instance Type | Arch | vCPU | Memory (GiB) | Price (USD/hour) |
|---|---|---|---|---|
| t4g.small | ARM | 2 | 2 | 0.0168 |
| t2.small | x86 | 1 | 2 | 0.0230 |

I believe because ARM is more energy-efficient than x86, AWS is charging you less for essentially less electricity used... 🤷‍♂️

Raft Voter Rejection

There is this seemingly inconsequential yet non-stop warning in the Nomad logs that I want to figure out the root cause of: